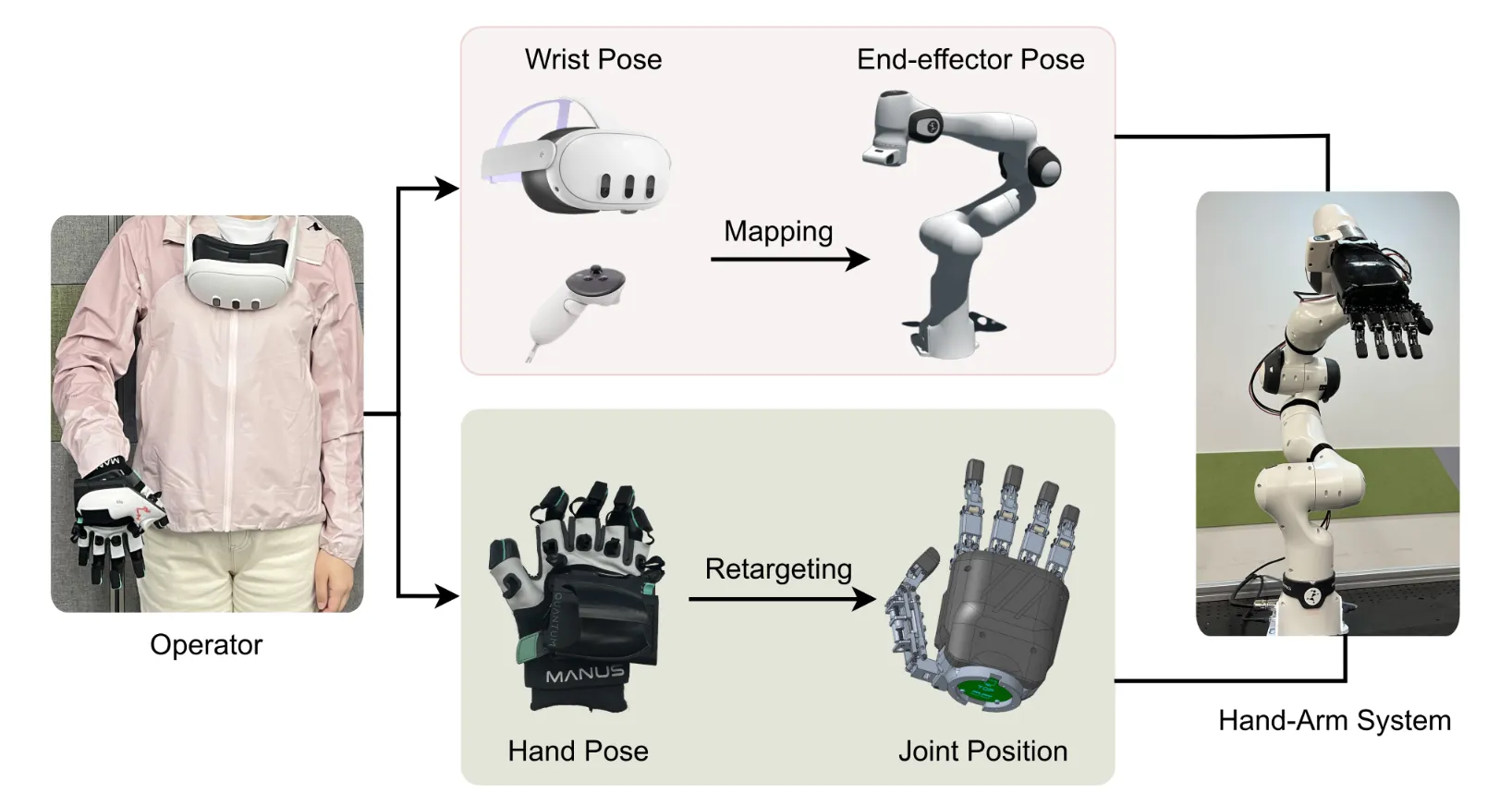

Method

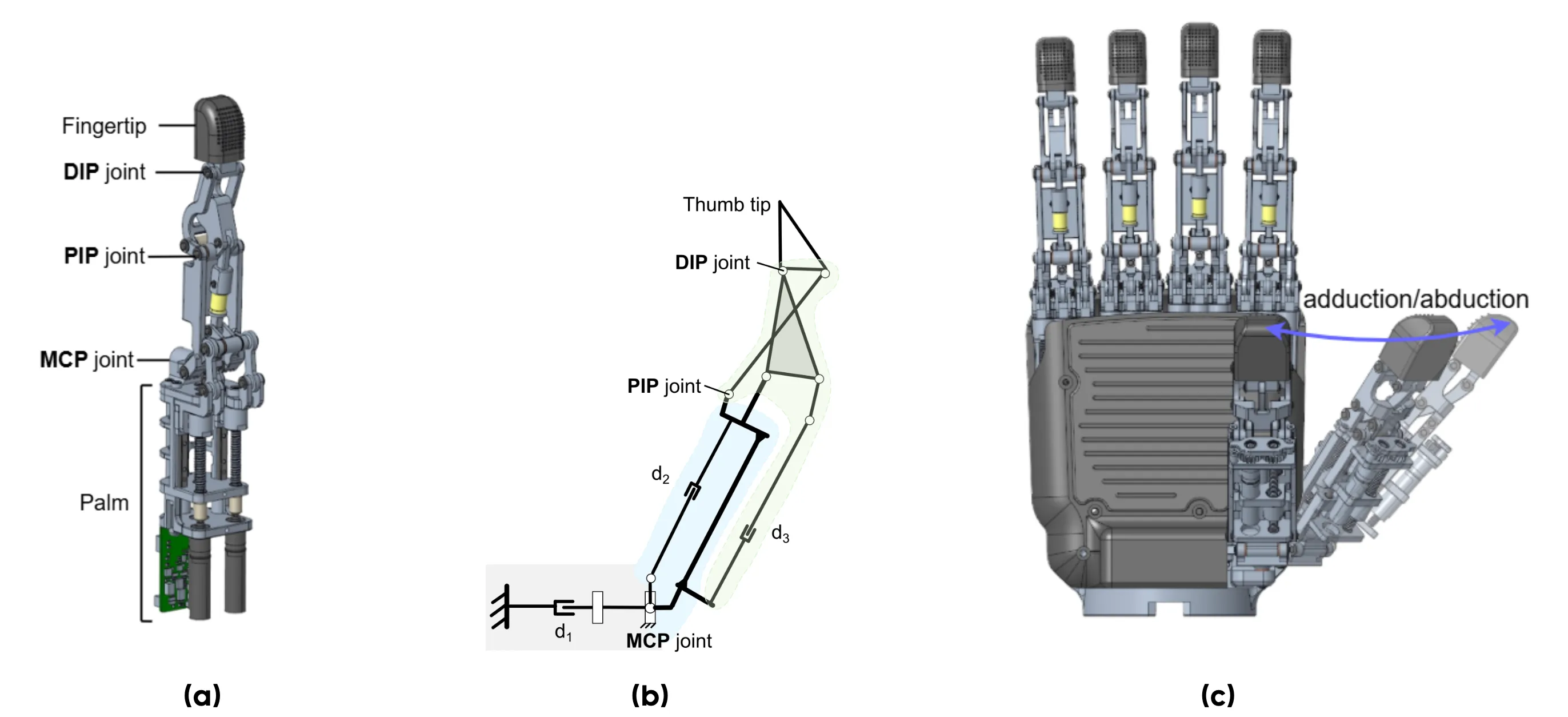

ByteDexter Hand Design

ByteDexter is an anthropomorphic dexterous hand developed by ByteDance Seed. It has 20 degrees of freedom (DoFs), 15 of which are fully actuated. All 15 motors and the embedded boards are integrated within the palm, so ByteDexter can operate as a standalone hand module. The fingertips and palm are also designed to accommodate tactile sensors.

Each of ByteDexter’s four fingers uses a parallel-serial kinematic structure: a 2-DoF metacarpophalangeal (MCP) joint, a 1-DoF proximal interphalangeal (PIP) joint, and a passively driven 1-DoF distal interphalangeal (DIP) joint together replicate the kinematics of the human index, middle, ring, and pinky fingers. The novel thumb design decouples the two DoFs of the thumb’s MCP joint, which expands the thumb’s workspace and improves precision grasping.
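The DoF figures in these two paragraphs can be reconciled with a short tally. The Python sketch below is a bookkeeping aid, not ByteDexter's software. It encodes the finger chain as described (2-DoF MCP, 1-DoF PIP, passive 1-DoF DIP) and the stated totals of 20 DoFs, 15 actuated DoFs, and 15 motors. The thumb figures it reports are an inference: it assumes the thumb accounts for every DoF not in the four fingers, because the text does not give the thumb's DoF count directly. The names `Joint`, `chain_counts`, and `dof_budget` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Joint:
    name: str
    dof: int
    actuated: bool  # False for passively driven joints (no dedicated motor)


# Finger chain as described in the text (index, middle, ring, pinky).
FINGER_CHAIN = (
    Joint("MCP", 2, True),
    Joint("PIP", 1, True),
    Joint("DIP", 1, False),  # passively driven
)
FINGERS = ("index", "middle", "ring", "pinky")

# Totals stated in the text.
HAND_DOF = 20
HAND_ACTUATED_DOF = 15
MOTORS = 15


def chain_counts(chain):
    """Return (total DoF, actuated DoF) for one kinematic chain."""
    total = sum(j.dof for j in chain)
    actuated = sum(j.dof for j in chain if j.actuated)
    return total, actuated


def dof_budget():
    """Split the stated hand totals into finger and (inferred) thumb shares."""
    per_total, per_act = chain_counts(FINGER_CHAIN)
    fingers = (per_total * len(FINGERS), per_act * len(FINGERS))
    # ASSUMPTION: the thumb holds every DoF not in the four fingers.
    thumb = (HAND_DOF - fingers[0], HAND_ACTUATED_DOF - fingers[1])
    return {
        "fingers": fingers,  # (total DoF, actuated DoF)
        "thumb": thumb,      # (total DoF, actuated DoF), inferred
        "passive": HAND_DOF - HAND_ACTUATED_DOF,
        "motors_match_actuated": MOTORS == HAND_ACTUATED_DOF,
    }


if __name__ == "__main__":
    for key, value in dof_budget().items():
        print(f"{key}: {value}")
```

Under that assumption, the four fingers contribute 16 DoFs (12 actuated), which leaves 4 DoFs for the thumb (3 actuated) and 5 passive DoFs overall: the four DIP joints plus one passive thumb DoF. The motor count equals the actuated DoF count.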